Introduction:

In SAS Data Quality implementations, clients may require SAS Data Quality output in a REST-compliant format so that their downstream applications can consume it.

In one such project, there was a specific requirement to expose match codes for clustering attributes in a REST-compliant format. A custom search application built by the client passed the key clustering attributes as a request to the SAS Data Quality engine, which generated the corresponding match codes as the response. This output was then used to query the generated customer clusters, and the matching records were returned to the main screen of the client's custom search portal.

Requirements for REST APIs related to SAS DataFlux Data Management jobs vary from customer to customer. This blog is intended to help readers configure the REST API in SAS Data Quality implementations.

REST API Overview:

Representational State Transfer (REST) is an architectural style or pattern for designing web services that access the system’s resources using HTTP methods. The resources are accessed using Uniform Resource Identifier (URI) paths.
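As a rough illustration of the idea (not the DataFlux API itself), the sketch below treats an in-memory dictionary as a set of resources and maps HTTP methods onto it by URI path. The `handle` function, the `/jobs/...` paths, and the status codes chosen are assumptions made for this example only.

```python
# Toy illustration of REST: HTTP methods act on resources named by URI paths.
# The store, the paths, and handle() are hypothetical and exist only for this sketch.
resources = {}


def handle(method, path, body=None):
    """Return (status_code, payload) for a request against the in-memory store."""
    if method == "GET":
        if path in resources:
            return 200, resources[path]
        return 404, None
    if method == "PUT":
        created = path not in resources
        resources[path] = body
        return (201 if created else 200), body
    if method == "DELETE":
        if path in resources:
            del resources[path]
            return 204, None
        return 404, None
    # Any other method is not supported by this toy example.
    return 405, None
```

The point is only that the same URI identifies the resource throughout, while the HTTP method selects what happens to it.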

Three REST Application Programming Interfaces (APIs) are included with DataFlux Data Management Server. These data management APIs are summarized in the following table:

| Execution Mode | Description | Associated API |
|---|---|---|
| Real-time | The input is passed in the request and the output is immediately returned in the response (as soon as the service job finishes). | There are two types of real-time service job flows: data and process. Each has its own API; their functionality, media types, and resources are very similar. |
| Batch | | |

DataFlux Data Management Real-Time Data Job REST API

DataFlux Data Management Real-Time Data Job REST API is used to interact with (manage and execute) real-time data services on DataFlux Data Management Server.

This API allows clients to perform the following tasks:

- List, upload, download, update, or delete service job flows on the server.

- Retrieve metadata about the inputs and outputs of individual service job flows.

- Execute individual service jobs by passing them input data and retrieving the output data.

A real-time service is a job flow that is executed in real-time service mode, where the request contains the input data and the response contains the output data. Real-time services are used for very quick job executions, where the client waits for the output data on the same connection (so it is more like "near-real-time").

This API is an alternative to the older SOAP API of DataFlux Data Management Server. It is designed to expose this pre-existing functionality in a REST-compliant way.

Steps to deploy Data Job as a Real-time Service

Create Data Job in Data Management Studio (DMS)

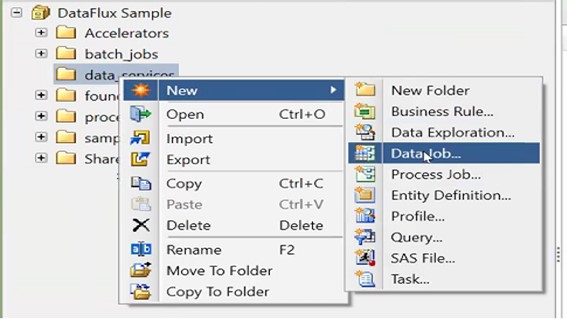

- Log on to DMS -> Select the Folder tab -> Expand the required repository -> Right-click the data services folder and select New -> Data Job…

- Enter the job name and click OK.

Figure 1: Selecting Data Job

Figure 2: Using External Data Provider

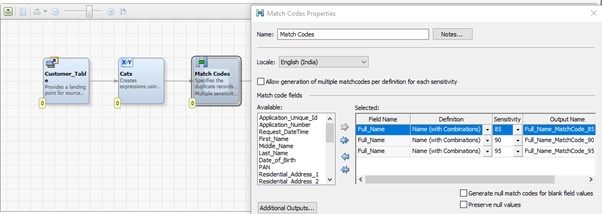

Note: The example in this document generates match codes for the input parameters. Other nodes can be configured as needed, depending on the business requirement.

Figure 3: Generating Match Codes

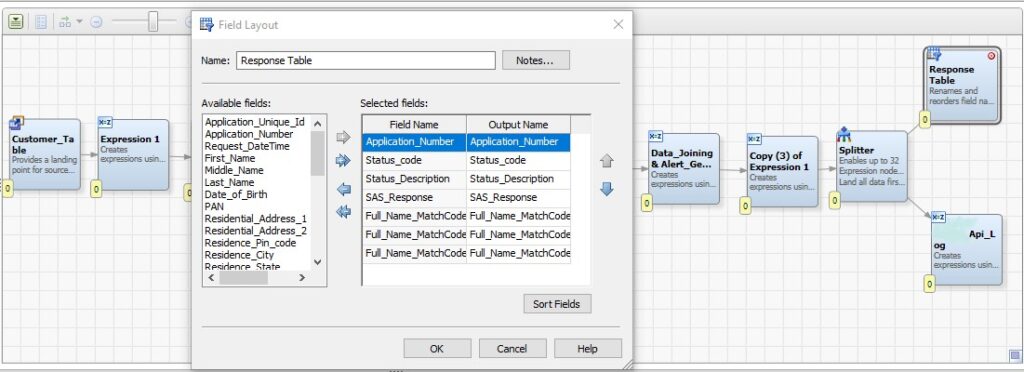

Figure 4: Field Layout node for Response

Figure 5: Final Job

Register Data Job as a Real Time Data Service

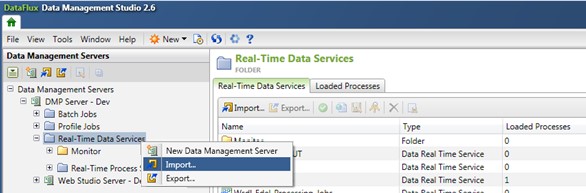

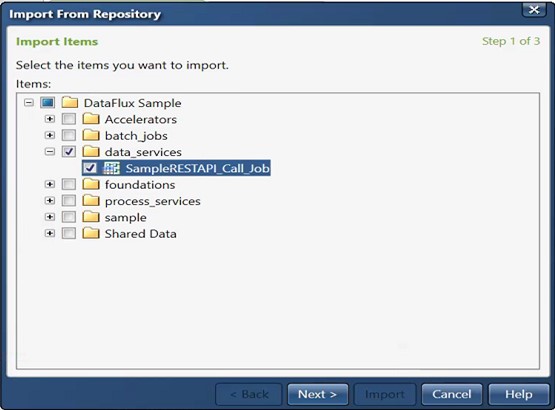

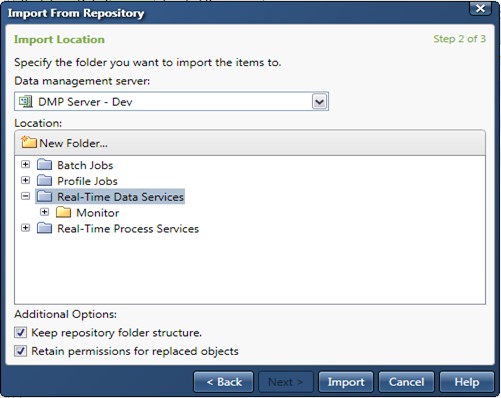

Figure 6: Import as Real-Time Data Services

Figure 7: Navigate to Repository

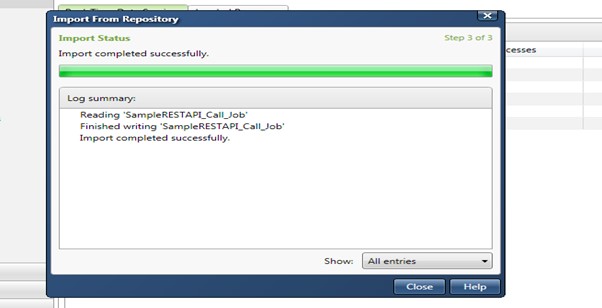

Figure 8: Import From Repository

Figure 9: Import Status

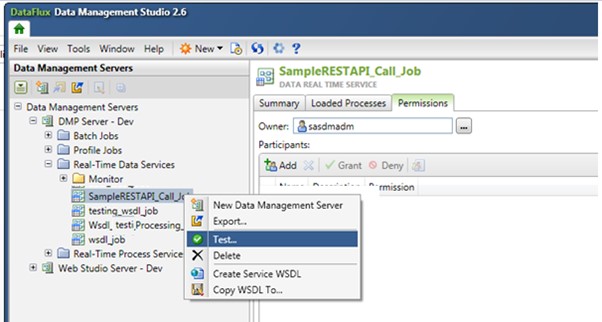

Test the Real-Time Data Service

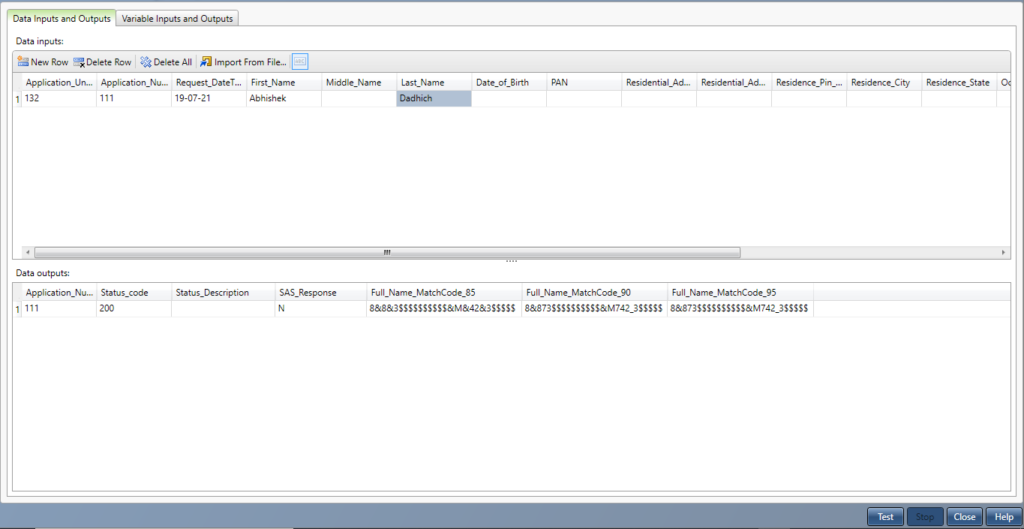

Figure 10: Job Testing

- Click the New Row option -> Enter the parameter values as required -> Click Test.

- The generated match-code output columns will be displayed.

Figure 11: Input and Output of Testing

REST API Related Tasks

Access the REST API URLs

A Data Management Server job or service is referred to by its ID, which is simply the name of the job or service (with its extension), Base64 encoded. Base64 encoding is a common way to encode paths or other resource names in a URL; without it, for example, a filename or path that contains a space can cause problems. The REST URL must be accessed for the remaining REST API tasks.
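To make the ID concrete, here is a minimal sketch of the encoding using Python's standard `base64` module. The job name `Match Codes.ddf` is a hypothetical example, and the `url_safe` switch is an assumption: check your server's documentation for which Base64 alphabet it expects.

```python
import base64


def job_id(job_name: str, url_safe: bool = False) -> str:
    """Base64-encode a job or service name (including its extension)."""
    raw = job_name.encode("utf-8")
    encoder = base64.urlsafe_b64encode if url_safe else base64.b64encode
    return encoder(raw).decode("ascii")


def job_name_from_id(encoded: str, url_safe: bool = False) -> str:
    """Decode an ID back to the original job or service name."""
    decoder = base64.urlsafe_b64decode if url_safe else base64.b64decode
    return decoder(encoded).decode("utf-8")


# Hypothetical job name containing a space, which would be awkward in a raw URL.
example_id = job_id("Match Codes.ddf")
print(example_id)
print(job_name_from_id(example_id))
```

The encoded ID contains no spaces, so it can be placed in a URL path where the raw name could not.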

- Open the link below to access the REST details, and enter the credentials when prompted.

Note: A sample server name is used in this document. Substitute your own server details in the HTTP URL. The port number may differ depending on the configuration chosen at installation.

http://SASDataMgmtRTDataJob/rest/jobFlow

Test the REST API

Many tools can be used to test the REST API. Postman is one such tool.

For first-time access, the authorization details need to be entered. Select Basic Auth as the type and enter the relevant credentials for the DM server.

Once the authorization details are complete, click Send and the server details will be displayed. You can then enter the ID of the job or service you created and send a POST request to test the REST API against it.
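The same Basic Auth check can be scripted instead of run from a GUI client. The sketch below uses only Python's standard library to build a GET request with a Basic `Authorization` header for the sample URL shown earlier. The `build_request` helper and the `dm_user` / `dm_password` credentials are placeholders invented for this example; the request is built but not sent, because sending it requires a reachable server.

```python
import base64
import urllib.request


def build_request(url: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request carrying an HTTP Basic Authorization header."""
    # Basic Auth is the Base64 encoding of "user:password".
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Basic {token}")
    return request


# Sample server name from this document; replace with your own host and port.
# The credentials are placeholders, not real accounts.
request = build_request(
    "http://SASDataMgmtRTDataJob/rest/jobFlow", "dm_user", "dm_password"
)

# Sending the request needs a reachable server, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     print(response.status, response.read().decode("utf-8"))
```

Basic Auth only encodes the credentials; it does not encrypt them, so HTTPS is preferable wherever the server supports it.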
